0. Environment preparation and planning

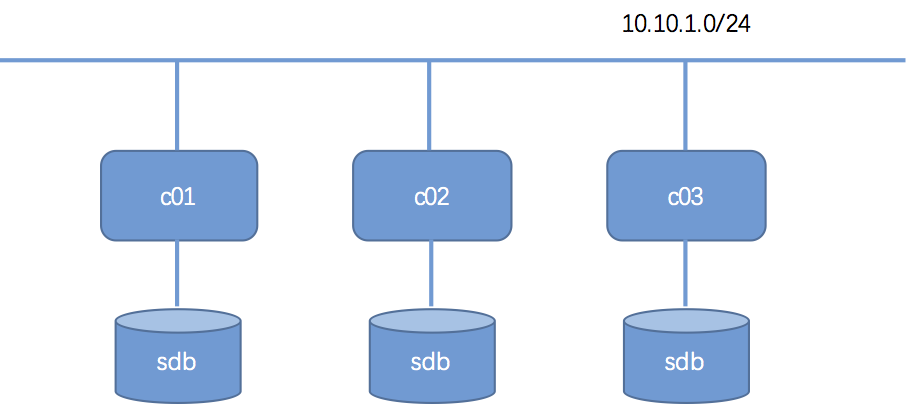

Topology diagram

Planning table

| ID | Hostname | IP address | Disks | Node designation |
|---|---|---|---|---|
| 1 | c01 | 10.10.1.173 | sda: 20 GB; sdb: 20 GB | quorum-manager |
| 2 | c02 | 10.10.1.174 | sda: 20 GB; sdb: 20 GB | quorum-manager |
| 3 | c03 | 10.10.1.175 | sda: 20 GB; sdb: 20 GB | quorum |

| Name | Value |
|---|---|
| Cluster name | 3NodeCluster |
| Primary node | c01 |
| Remote commands | ssh, scp |
| NSD input file | /etc/gpfs/3node_nsd_input |

Kernel

[root@c01 ~]# uname -r

2.6.32-431.el6.x86_64

[root@c01 ~]# cat /etc/redhat-release

CentOS release 6.5 (Final)

1. Installation and deployment

Perform the following steps on all three nodes: c01, c02, and c03.

Disable SELinux

[root@c01 ~]# sed -i "s#SELINUX=enforcing#SELINUX=disabled#g" /etc/selinux/config

[root@c01 ~]# cat /etc/selinux/config |grep SELINUX=

# SELINUX= can take one of these three values:

SELINUX=disabled
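The sed command above only matches `SELINUX=enforcing`, so a host that is set to `permissive` would be left unchanged. A minimal sketch that rewrites whatever value is present follows; the function name `disable_selinux_in` is hypothetical, and the config path is a parameter so the change can be tried on a copy first.

```shell
# Hypothetical helper: set SELINUX=disabled in the given config file,
# whatever the current value is (enforcing or permissive).
disable_selinux_in() {
    cfg="$1"
    # Only the assignment line is rewritten. Comment lines start with '#'
    # and SELINUXTYPE= does not match the pattern '^SELINUX='.
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
    # Print the resulting line for a quick visual check.
    grep '^SELINUX=' "$cfg"
}

# Usage (the new setting applies after a reboot):
# disable_selinux_in /etc/selinux/config
```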

Update the hosts file

[root@c01 ~]# echo "10.10.1.173 c01" >> /etc/hosts

[root@c01 ~]# echo "10.10.1.174 c02" >> /etc/hosts

[root@c01 ~]# echo "10.10.1.175 c03" >> /etc/hosts

[root@c01 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

10.10.1.173 c01

10.10.1.174 c02

10.10.1.175 c03
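Because `>>` appends unconditionally, running the echo commands a second time would add duplicate lines. A small idempotent sketch is shown below; `add_host_entry` is a hypothetical helper name and the target file is passed as a parameter.

```shell
# Hypothetical helper: append "IP NAME" to a hosts file only if that
# exact line is not already present.
add_host_entry() {
    file="$1"; ip="$2"; name="$3"
    # -x matches the whole line, -F treats the pattern as a fixed string
    grep -qxF "$ip $name" "$file" 2>/dev/null || echo "$ip $name" >> "$file"
}

# Usage with the addresses from the planning table:
# add_host_entry /etc/hosts 10.10.1.173 c01
# add_host_entry /etc/hosts 10.10.1.174 c02
# add_host_entry /etc/hosts 10.10.1.175 c03
```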

Prepare the installation packages

[root@c01 ~]# yum -y install unzip

[root@c01 ~]# unzip gpfs_install-3.5.zip

# Extract the installer; --silent accepts the license without prompting

[root@c01 ~]# ./gpfs_install-3.5.0-0_x86_64 --silent

Extracting License Acceptance Process Tool to /usr/lpp/mmfs/3.5 ...

tail -n +429 ./gpfs_install-3.5.0-0_x86_64 | /bin/tar -C /usr/lpp/mmfs/3.5 -xvz --exclude=*rpm --exclude=*tgz 2> /dev/null 1> /dev/null

Installing JRE ...

tail -n +429 ./gpfs_install-3.5.0-0_x86_64 | /bin/tar -C /usr/lpp/mmfs/3.5 --wildcards -xvz ./ibm-java*tgz 2> /dev/null 1> /dev/null

Invoking License Acceptance Process Tool ...

/usr/lpp/mmfs/3.5/ibm-java-x86_64-60/jre/bin/java -cp /usr/lpp/mmfs/3.5/LAP_HOME/LAPApp.jar com.ibm.lex.lapapp.LAP -l /usr/lpp/mmfs/3.5/LA_HOME -m /usr/lpp/mmfs/3.5 -s /usr/lpp/mmfs/3.5 -t 5

License Agreement Terms accepted.

Extracting Product RPMs to /usr/lpp/mmfs/3.5 ...

tail -n +429 ./gpfs_install-3.5.0-0_x86_64 | /bin/tar -C /usr/lpp/mmfs/3.5 --wildcards -xvz ./gpfs.base-3.5.0-3.x86_64.rpm ./gpfs.docs-3.5.0-3.noarch.rpm ./gpfs.gpl-3.5.0-3.noarch.rpm ./gpfs.msg.en_US-3.5.0-3.noarch.rpm 2> /dev/null 1> /dev/null

- gpfs.base-3.5.0-3.x86_64.rpm

- gpfs.docs-3.5.0-3.noarch.rpm

- gpfs.gpl-3.5.0-3.noarch.rpm

- gpfs.msg.en_US-3.5.0-3.noarch.rpm

Removing License Acceptance Process Tool from /usr/lpp/mmfs/3.5 ...

rm -rf /usr/lpp/mmfs/3.5/LAP_HOME /usr/lpp/mmfs/3.5/LA_HOME

Removing JRE from /usr/lpp/mmfs/3.5 ...

rm -rf /usr/lpp/mmfs/3.5/ibm-java*tgz

[root@c01 ~]# cd /usr/lpp/mmfs/3.5/

[root@c01 3.5]# ls -l

total 12952

-rw-r--r-- 1 root root 12405953 Jan 19 2013 gpfs.base-3.5.0-3.x86_64.rpm

-rw-r--r-- 1 root root 230114 Jan 19 2013 gpfs.docs-3.5.0-3.noarch.rpm

-rw-r--r-- 1 root root 509477 Jan 19 2013 gpfs.gpl-3.5.0-3.noarch.rpm

-rw-r--r-- 1 root root 99638 Jan 19 2013 gpfs.msg.en_US-3.5.0-3.noarch.rpm

drwxr-xr-x 2 root root 4096 Sep 27 06:31 license

-rw-r--r-- 1 root root 39 Sep 27 06:31 status.dat

[root@c01 ~]# yum -y install ksh

...

[root@c01 3.5]# rpm -ivh gpfs.base-3.5.0-3.x86_64.rpm

Preparing... ########################################### [100%]

1:gpfs.base ########################################### [100%]

[root@c01 3.5]# rpm -ivh gpfs.docs-3.5.0-3.noarch.rpm

Preparing... ########################################### [100%]

1:gpfs.docs ########################################### [100%]

[root@c01 3.5]# rpm -ivh gpfs.gpl-3.5.0-3.noarch.rpm

Preparing... ########################################### [100%]

1:gpfs.gpl ########################################### [100%]

[root@c01 3.5]# rpm -ivh gpfs.msg.en_US-3.5.0-3.noarch.rpm

Preparing... ########################################### [100%]

1:gpfs.msg.en_US ########################################### [100%]

Compile and install the GPL layer binaries

[root@c01 ~]# cd /usr/lpp/mmfs/src/

[root@c01 src]# yum -y install cpp gcc gcc-c++

...

[root@c01 src]# make LINUX_DISTRIBUTION=REDHAT_AS_LINUX Autoconfig World InstallImages \

2>/tmp/make_err 1>/tmp/make_out

# Verify

[root@c01 src]# ls -al /lib/modules/2.6.32-696.10.2.el6.x86_64/extra/

total 6016

drwxr-xr-x 2 root root 4096 Sep 27 07:08 .

drwxr-xr-x 7 root root 4096 Sep 27 07:08 ..

-rw-r--r-- 1 root root 2894349 Sep 27 07:08 mmfs26.ko

-rw-r--r-- 1 root root 2547296 Sep 27 07:08 mmfslinux.ko

-rw-r--r-- 1 root root 708128 Sep 27 07:08 tracedev.ko
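The listing above shows the three kernel modules produced by the build. A small sketch that checks for all of them in one step follows; `gpfs_modules_present` is a hypothetical name, and the usage line assumes the modules were installed under the directory of the running kernel (`uname -r`).

```shell
# Hypothetical helper: succeed only if all three GPFS kernel modules
# from the listing exist in the given directory.
gpfs_modules_present() {
    dir="$1"
    for mod in mmfs26.ko mmfslinux.ko tracedev.ko; do
        if [ ! -f "$dir/$mod" ]; then
            echo "missing: $dir/$mod" >&2
            return 1
        fi
    done
    return 0
}

# Usage (assumes the build targeted the running kernel):
# gpfs_modules_present "/lib/modules/$(uname -r)/extra" && echo "GPL layer OK"
```

If the check fails, /tmp/make_err from the make command above is the first place to look.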

Configure passwordless SSH

This has to be done on every node, which is rather tedious.

# Generate a key pair

[root@c01 ~]# ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

6b:e2:e6:ac:9f:9e:86:5b:74:20:c3:69:76:0d:8d:9a root@c01

The key's randomart image is:

+--[ RSA 2048]----+

| .o |

| . ..o. |

| Boo . |

| oE+ . |

| . S |

| . . . |

| .o o |

| .+o= |

| oOO |

+-----------------+

# The key pair now exists

[root@c01 ~]# ls .ssh/

id_rsa id_rsa.pub

# Copy the public key into authorized_keys

[root@c01 .ssh]# ssh-copy-id c01

The authenticity of host 'c01 (10.10.1.173)' can't be established.

RSA key fingerprint is 73:57:3c:94:e3:c8:62:2b:9c:0a:bc:87:a5:07:92:14.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'c01,10.10.1.173' (RSA) to the list of known hosts.

root@c01's password:

Now try logging into the machine, with "ssh 'c01'", and check in:

.ssh/authorized_keys

to make sure we haven't added extra keys that you weren't expecting.

# The first connection to each host always shows a prompt like this:

The authenticity of host 'c01 (10.10.1.173)' can't be established.

RSA key fingerprint is 73:57:3c:94:e3:c8:62:2b:9c:0a:bc:87:a5:07:92:14.

Are you sure you want to continue connecting (yes/no)?

To skip this prompt, set StrictHostKeyChecking to no in the SSH client configuration file:

[root@c01 .ssh]# cat /etc/ssh/ssh_config |grep HostKey

StrictHostKeyChecking no

[root@c01 ~]# ssh-copy-id c02

Warning: Permanently added 'c02,10.10.1.174' (RSA) to the list of known hosts.

root@c02's password:

Now try logging into the machine, with "ssh 'c02'", and check in:

.ssh/authorized_keys

to make sure we haven't added extra keys that you weren't expecting.

[root@c01 ~]# ssh-copy-id c03

Warning: Permanently added 'c03,10.10.1.175' (RSA) to the list of known hosts.

root@c03's password:

Now try logging into the machine, with "ssh 'c03'", and check in:

.ssh/authorized_keys

to make sure we haven't added extra keys that you weren't expecting.

# Test

[root@c01 ~]# for i in 1 2 3;do ssh c0$i date;done

Fri Sep 29 05:19:35 CST 2017

Fri Sep 29 05:19:36 CST 2017

Fri Sep 29 05:19:36 CST 2017

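However, this approach is tedious, especially as the number of nodes grows. A better solution is to build the authorized_keys file by hand once, place it on an NFS share, and copy it into the .ssh directory on every node, so that ssh-copy-id does not have to be run for each pair of hosts. See the article on SSH mutual trust configuration.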
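The manual approach can be sketched roughly as follows. `build_authorized_keys` is a hypothetical helper that merges the public keys collected from each node into a single file; the `/shared` paths in the usage comments are assumptions standing in for whatever NFS export is available.

```shell
# Hypothetical helper: merge the public keys collected from every node
# into one authorized_keys file, dropping duplicate lines.
build_authorized_keys() {
    out="$1"; shift
    sort -u "$@" > "$out"
    # sshd is strict about permissions on this file
    chmod 600 "$out"
}

# Usage sketch (paths are assumed, one .pub file per node):
# build_authorized_keys /shared/authorized_keys /shared/keys/*.pub
# for n in c01 c02 c03; do
#     scp /shared/authorized_keys "$n":.ssh/authorized_keys
# done
```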

2. Testing

Run these steps on one node only, here c01.

Create the 3-node GPFS cluster

[root@c01 mmfs]# ./bin/mmcrcluster -N "c01:manager-quorum,c02:manager-quorum,c03:quorum" \

-p c01 -r /usr/bin/ssh -R /usr/bin/scp -C 3NodeCluster

Fri Sep 29 05:42:52 CST 2017: mmcrcluster: Processing node c01

Fri Sep 29 05:42:52 CST 2017: mmcrcluster: Processing node c02

Fri Sep 29 05:42:55 CST 2017: mmcrcluster: Processing node c03

mmcrcluster: Command successfully completed

mmcrcluster: Warning: Not all nodes have proper GPFS license designations.

Use the mmchlicense command to designate licenses as needed.

mmcrcluster: Propagating the cluster configuration data to all

affected nodes. This is an asynchronous process.

[root@c01 mmfs]# ./bin/mmlscluster

===============================================================================

| Warning: |

| This cluster contains nodes that do not have a proper GPFS license |

| designation. This violates the terms of the GPFS licensing agreement. |

| Use the mmchlicense command and assign the appropriate GPFS licenses |

| to each of the nodes in the cluster. For more information about GPFS |

| license designation, see the Concepts, Planning, and Installation Guide. |

===============================================================================

GPFS cluster information

========================

GPFS cluster name: 3NodeCluster.c01

GPFS cluster id: 5187696956017568988

GPFS UID domain: 3NodeCluster.c01

Remote shell command: /usr/bin/ssh

Remote file copy command: /usr/bin/scp

GPFS cluster configuration servers:

-----------------------------------

Primary server: c01

Secondary server: (none)

Node Daemon node name IP address Admin node name Designation

-------------------------------------------------------------------

1 c01 10.10.1.173 c01 quorum-manager

2 c02 10.10.1.174 c02 quorum-manager

3 c03 10.10.1.175 c03 quorum

# Accept the license

[root@c01 mmfs]# ./bin/mmchlicense server --accept -N c01,c02,c03

The following nodes will be designated as possessing GPFS server licenses:

c01

c02

c03

mmchlicense: Command successfully completed

mmchlicense: Propagating the cluster configuration data to all

affected nodes. This is an asynchronous process.

[root@c01 mmfs]# ./bin/mmlslicense -L

Node name Required license Designated license

-------------------------------------------------------------------

c01 server server

c02 server server

c03 server server

Summary information

---------------------

Number of nodes defined in the cluster: 3

Number of nodes with server license designation: 3

Number of nodes with client license designation: 0

Number of nodes still requiring server license designation: 0

Number of nodes still requiring client license designation: 0

Create the NSDs (Network Shared Disks)

[root@c01 mmfs]# mkdir -p /etc/gpfs

[root@c01 mmfs]# echo "/dev/sdb:c01::dataAndMetadata:3001:c01nsd01" >>/etc/gpfs/3node_nsd_input

[root@c01 mmfs]# echo "/dev/sdb:c02::dataAndMetadata:3002:c02nsd01" >>/etc/gpfs/3node_nsd_input

[root@c01 mmfs]# echo "/dev/sdb:c03::dataAndMetadata:3003:c03nsd01" >>/etc/gpfs/3node_nsd_input

[root@c01 mmfs]# cat /etc/gpfs/3node_nsd_input

/dev/sdb:c01::dataAndMetadata:3001:c01nsd01

/dev/sdb:c02::dataAndMetadata:3002:c02nsd01

/dev/sdb:c03::dataAndMetadata:3003:c03nsd01

[root@c01 mmfs]# ./bin/mmcrnsd -F /etc/gpfs/3node_nsd_input -v yes

mmcrnsd: Processing disk sdb

mmcrnsd: Processing disk sdb

mmcrnsd: Processing disk sdb

mmcrnsd: Propagating the cluster configuration data to all

affected nodes. This is an asynchronous process.

[root@c01 ~]# cd

[root@c01 ~]# cat /etc/gpfs/3node_nsd_input

# /dev/sdb:c01::dataAndMetadata:3001:c01nsd01

c01nsd01:::dataAndMetadata:3001::system

# /dev/sdb:c02::dataAndMetadata:3002:c02nsd01

c02nsd01:::dataAndMetadata:3002::system

# /dev/sdb:c03::dataAndMetadata:3003:c03nsd01

c03nsd01:::dataAndMetadata:3003::system

[root@c01 ~]# mmlsnsd

File system Disk name NSD servers

---------------------------------------------------------------------------

(free disk) c01nsd01 c01

(free disk) c02nsd01 c02

(free disk) c03nsd01 c03
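The three descriptor lines written to /etc/gpfs/3node_nsd_input differ only in the server name, the failure group, and the NSD name. A sketch that generates them in a loop is shown below; `nsd_descriptors` is a hypothetical helper, and the field layout is copied from the lines above rather than taken from the GPFS reference.

```shell
# Hypothetical helper: print one NSD descriptor per node, in the same
# colon-separated layout used above:
#   device:server::usage:failure_group:nsd_name
nsd_descriptors() {
    device="$1"; shift
    i=1
    for node in "$@"; do
        # failure groups 3001, 3002, ... and names c01nsd01, c02nsd01, ...
        printf '%s:%s::dataAndMetadata:%d:%snsd01\n' \
            "$device" "$node" "$((3000 + i))" "$node"
        i=$((i + 1))
    done
}

# Usage:
# nsd_descriptors /dev/sdb c01 c02 c03 > /etc/gpfs/3node_nsd_input
```

Writing the file with `>` instead of three `>>` appends also avoids stale lines if the step is repeated.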

Create the GPFS file system

[root@c01 ~]# mmcrfs /gpfs1 /dev/gpfs1 -F /etc/gpfs/3node_nsd_input -B 256k \

-n 80 -v no -R 2 -M 2 -r 2 -m 2 -A yes -Q no

mmcommon: tsctl command cannot be executed. Either none of the

nodes in the cluster are reachable, or GPFS is down on all of the nodes.

mmcrfs: Command failed. Examine previous error messages to determine cause.

# Error: the cluster has not been started

[root@c01 ~]# mmgetstate -aLs

Node number Node name Quorum Nodes up Total nodes GPFS state Remarks

------------------------------------------------------------------------------------

1 c01 0 0 3 down quorum node

2 c02 0 0 3 unknown quorum node

3 c03 0 0 3 unknown quorum node

Summary information

---------------------

mmgetstate: Information cannot be displayed. Either none of the

nodes in the cluster are reachable, or GPFS is down on all of the nodes.

# Check that SELinux is disabled and stop iptables on all nodes

[root@c01 ~]# getenforce

Disabled

[root@c01 ~]# service iptables stop

# Start the cluster

[root@c01 ~]# mmstartup -a

Fri Sep 29 06:11:32 CST 2017: mmstartup: Starting GPFS ...

[root@c01 ~]#

[root@c01 ~]# mmgetstate -aLs
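After mmstartup, the nodes may need some seconds to reach the active state, and mmcrfs fails while GPFS is down. A sketch that checks mmgetstate output before continuing is shown below; `all_nodes_active` is a hypothetical helper, and it assumes node rows start with the node number, as in the listings in this document.

```shell
# Hypothetical helper: read "mmgetstate -a" (or -aL) output on stdin and
# succeed only if every node row contains the state "active".
all_nodes_active() {
    awk '
        # Node rows begin with the numeric node number.
        $1 ~ /^[0-9]+$/ {
            nodes++
            for (i = 2; i <= NF; i++) if ($i == "active") { up++; break }
        }
        END { exit !(nodes > 0 && nodes == up) }
    '
}

# Usage: poll until every node reports active (the interval is arbitrary)
# until mmgetstate -a | all_nodes_active; do sleep 5; done
```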

# Create the file system again

[root@c01 ~]# mmcrfs /gpfs1 /dev/gpfs1 -F /etc/gpfs/3node_nsd_input \

-B 256k -n 80 -v no -R 2 -M 2 -r 2 -m 2 -A yes -Q no

The following disks of gpfs1 will be formatted on node c01:

c01nsd01: size 20971520 KB

c02nsd01: size 20971520 KB

c03nsd01: size 20971520 KB

Formatting file system ...

Disks up to size 257 GB can be added to storage pool system.

Creating Inode File

Creating Allocation Maps

Creating Log Files

Clearing Inode Allocation Map

Clearing Block Allocation Map

Formatting Allocation Map for storage pool system

Completed creation of file system /dev/gpfs1.

mmcrfs: Propagating the cluster configuration data to all

affected nodes. This is an asynchronous process.

# Mount on all nodes

[root@c01 ~]# mmmount gpfs1 -a

Fri Sep 29 06:15:33 CST 2017: mmmount: Mounting file systems ...

[root@c01 ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/vg_c01-lv_root 18G 1.9G 15G 12% /

tmpfs 491M 0 491M 0% /dev/shm

/dev/sda1 477M 49M 404M 11% /boot

/dev/gpfs1 60G 754M 60G 2% /gpfs1

Test

# Write a file on c03

[root@c03 ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/vg_c01-lv_root 18G 2.0G 15G 12% /

tmpfs 491M 0 491M 0% /dev/shm

/dev/sda1 477M 49M 404M 11% /boot

/dev/gpfs1 60G 16G 45G 26% /gpfs1

[root@c03 ~]# cd /gpfs1/

[root@c03 gpfs1]# date >> test1.txt

# Check on c01

[root@c01 ~]# cat /gpfs1/test1.txt

Fri Sep 29 06:16:36 CST 2017

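3. Upgrading GPFS

This test upgrades GPFS from 3.5.0.3 to 3.5.0.34. The GPFS service should be stopped during the upgrade and started again on all nodes once the upgrade is complete. The steps are:

- Prepare the update packages;

- Make sure the mmfs service is stopped on the node being upgraded;

- Install the update packages with rpm;

- Recompile the GPL layer;

- Upgrade the remaining nodes one after another;

- Start GPFS on the whole cluster.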
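The order of the steps in the list above can be written down as a dry run that only prints commands. `print_upgrade_plan` is a hypothetical helper; it assumes the update RPMs have already been unpacked into the same directory on every node and that the per-node commands are run over ssh.

```shell
# Hypothetical dry-run helper: print (not execute) the upgrade commands
# for each node, in the order of the list above.
print_upgrade_plan() {
    pkg_dir="$1"; shift     # assumed: update RPMs unpacked here on every node
    for node in "$@"; do
        echo "mmshutdown -N $node"
        echo "ssh $node 'cd $pkg_dir && rpm -Uvh *.rpm'"
        echo "ssh $node 'cd /usr/lpp/mmfs/src && make LINUX_DISTRIBUTION=REDHAT_AS_LINUX Autoconfig World InstallImages'"
    done
    echo "mmstartup -a"
}

# Usage:
# print_upgrade_plan /root/gpfs_update c01 c02 c03
```

Printing the plan first makes it easy to review the sequence before running anything on a live cluster.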

# check gpfs version

[root@c01 ~]# mmlsconfig

Configuration data for cluster 3NodeCluster.c01:

------------------------------------------------

myNodeConfigNumber 1

clusterName 3NodeCluster.c01

clusterId 5187696956017568988

autoload no

dmapiFileHandleSize 32

minReleaseLevel 3.5.0.3

adminMode central

File systems in cluster 3NodeCluster.c01:

-----------------------------------------

/dev/gpfs1

# Download the update package from

# https://www.ibm.com/support/home/product/U962793A86377G52/General_Parallel_File_System

# (an IBM ID is required)

# Upload and extract the update package

[root@c01 ~]# mkdir gpfs_update

[root@c01 ~]# tar -zxvf GPFS-3.5.0.34-x86_64-Linux.tar.gz -C gpfs_update

changelog

gpfs.base_3.5.0-34_amd64_update.deb

gpfs.base-3.5.0-34.x86_64.update.rpm

gpfs.docs_3.5.0-34_all.deb

gpfs.docs-3.5.0-34.noarch.rpm

gpfs.gpl_3.5.0-34_all.deb

gpfs.gpl-3.5.0-34.noarch.rpm

gpfs.msg.en-us_3.5.0-34_all.deb

gpfs.msg.en_US-3.5.0-34.noarch.rpm

README

[root@c01 ~]# cd gpfs_update/

[root@c01 gpfs_update]# ls -l

total 29424

-rw-r--r-- 1 root root 283 Mar 23 2017 changelog

-rw-r--r-- 1 30007 bin 14003236 Mar 15 2017 gpfs.base_3.5.0-34_amd64_update.deb

-rw-r--r-- 1 30007 bin 14259235 Mar 15 2017 gpfs.base-3.5.0-34.x86_64.update.rpm

-rw-r--r-- 1 30007 bin 236234 Mar 15 2017 gpfs.docs_3.5.0-34_all.deb

-rw-r--r-- 1 30007 bin 254716 Mar 15 2017 gpfs.docs-3.5.0-34.noarch.rpm

-rw-r--r-- 1 30007 bin 555762 Mar 15 2017 gpfs.gpl_3.5.0-34_all.deb

-rw-r--r-- 1 30007 bin 579652 Mar 15 2017 gpfs.gpl-3.5.0-34.noarch.rpm

-rw-r--r-- 1 30007 bin 105508 Mar 15 2017 gpfs.msg.en-us_3.5.0-34_all.deb

-rw-r--r-- 1 30007 bin 107720 Mar 15 2017 gpfs.msg.en_US-3.5.0-34.noarch.rpm

-rw-r--r-- 1 root root 5674 Feb 8 2017 README

# List the installed GPFS packages

[root@c01 gpfs_update]# rpm -qa |grep gpfs

gpfs.base-3.5.0-3.x86_64

gpfs.docs-3.5.0-3.noarch

gpfs.msg.en_US-3.5.0-3.noarch

gpfs.gpl-3.5.0-3.noarch

# Stop GPFS on this node, then install the update

[root@c01 gpfs_update]# mmshutdown -N c01

Sun Oct 1 10:12:57 CST 2017: mmshutdown: Starting force unmount of GPFS file systems

Sun Oct 1 10:13:02 CST 2017: mmshutdown: Shutting down GPFS daemons

c01: Shutting down!

c01: Unloading modules from /lib/modules/2.6.32-696.10.2.el6.x86_64/extra

Sun Oct 1 10:13:10 CST 2017: mmshutdown: Finished

[root@c01 gpfs_update]# rpm -ivhU gpfs.base-3.5.0-34.x86_64.update.rpm

[root@c01 gpfs_update]# rpm -ivhU gpfs.docs-3.5.0-34.noarch.rpm

[root@c01 gpfs_update]# rpm -ivhU gpfs.gpl-3.5.0-34.noarch.rpm

[root@c01 gpfs_update]# rpm -ivhU gpfs.msg.en_US-3.5.0-34.noarch.rpm

[root@c01 gpfs_update]# rpm -qa | grep gpfs

gpfs.docs-3.5.0-34.noarch

gpfs.gpl-3.5.0-34.noarch

gpfs.base-3.5.0-34.x86_64

gpfs.msg.en_US-3.5.0-34.noarch
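With several nodes to upgrade, comparing the package list by eye gets error-prone. The sketch below checks that every GPFS package is at the expected level; `gpfs_pkgs_at_level` is a hypothetical helper that reads package names in the format shown above.

```shell
# Hypothetical helper: read package names on stdin (as printed by
# "rpm -qa | grep gpfs") and succeed only if every one is at the given level.
gpfs_pkgs_at_level() {
    level="$1"                      # e.g. 3.5.0-34
    # Match "-<level>." so that 3.5.0-3 is not confused with 3.5.0-34.
    awk -v lvl="-$level." '
        { total++; if (index($0, lvl) > 0) ok++ }
        END { exit !(total > 0 && total == ok) }
    '
}

# Usage:
# rpm -qa | grep gpfs | gpfs_pkgs_at_level 3.5.0-34 && echo "all packages updated"
```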

# Repeat the upgrade on each of the other nodes.

# Once every node is upgraded,

# rebuild the GPL layer (on each node)

[root@c01 src]# make LINUX_DISTRIBUTION=REDHAT_AS_LINUX Autoconfig World InstallImages \

2>/tmp/make_err 1>/tmp/make_out

# Start GPFS

[root@c01 ~]# mmstartup -a

Sun Oct 1 10:31:45 CST 2017: mmstartup: Starting GPFS ...

[root@c01 ~]# mmgetstate -a

Node number Node name GPFS state

------------------------------------------

1 c01 active

2 c02 active

3 c03 active

[root@c01 ~]# df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/mapper/vg_c01-lv_root 18003272 2067416 15014668 13% /

tmpfs 502056 0 502056 0% /dev/shm

/dev/sda1 487652 49322 412730 11% /boot

/dev/gpfs1 62914560 772096 62142464 2% /gpfs1

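4. Migrating disks

The mmadddisk and mmdeldisk commands can be used to replace an NSD in a GPFS file system with a new one. When mmdeldisk removes an NSD, it migrates the data off that disk automatically, provided the remaining disks have enough free space. The main steps are:

- Attach a new disk;

- Create a new NSD on it;

- Add the new NSD to the file system;

- Delete the old NSD.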

# Attach a new disk to c01

[root@c01 ~]# fdisk -l | grep /dev

Disk /dev/sda: 21.5 GB, 21474836480 bytes

/dev/sda1 * 1 64 512000 83 Linux

/dev/sda2 64 2611 20458496 8e Linux LVM

Disk /dev/sdb: 21.5 GB, 21474836480 bytes

Disk /dev/sdc: 21.5 GB, 21474836480 bytes

# Create the new NSD

[root@c01 ~]# echo "/dev/sdc:c01::dataAndMetadata:3001:c01nsd02" >/etc/gpfs/migrate_nsd_input

[root@c01 ~]# mmcrnsd -F /etc/gpfs/migrate_nsd_input

mmcrnsd: Processing disk sdc

mmcrnsd: Propagating the cluster configuration data to all

affected nodes. This is an asynchronous process.

[root@c01 ~]# mmlsnsd

File system Disk name NSD servers

---------------------------------------------------------------------------

gpfs1 c01nsd01 c01

gpfs1 c02nsd01 c02

gpfs1 c03nsd01 c03

(free disk) c01nsd02 c01

# Add the NSD to gpfs1

[root@c01 ~]# mmadddisk gpfs1 -F /etc/gpfs/migrate_nsd_input

Unable to open disk 'c01nsd02' on node c02.

No such device

Error processing disks.

runRemoteCommand: c02: tsadddisk /dev/gpfs1 -F /var/mmfs/tmp/tsddFile.mmadddisk.7352 failed.

mmadddisk: tsadddisk failed.

Verifying file system configuration information ...

mmadddisk: Propagating the cluster configuration data to all

affected nodes. This is an asynchronous process.

mmadddisk: Command failed. Examine previous error messages to determine cause.

[root@c01 gpfs1]# mmadddisk gpfs1 c01nsd02

The following disks of gpfs1 will be formatted on node c01:

c01nsd02: size 20971520 KB

Extending Allocation Map

Checking Allocation Map for storage pool system

Completed adding disks to file system gpfs1.

mmadddisk: Propagating the cluster configuration data to all

affected nodes. This is an asynchronous process.

[root@c01 gpfs1]#

[root@c01 gpfs1]#

[root@c01 gpfs1]# mmlsnsd

File system Disk name NSD servers

---------------------------------------------------------------------------

gpfs1 c01nsd01 c01

gpfs1 c02nsd01 c02

gpfs1 c03nsd01 c03

gpfs1 c01nsd02 c01

[root@c01 gpfs1]# mmdf gpfs1

disk disk size failure holds holds free KB free KB

name in KB group metadata data in full blocks in fragments

--------------- ------------- -------- -------- ----- -------------------- -------------------

Disks in storage pool: system (Maximum disk size allowed is 239 GB)

c01nsd02 20971520 -1 Yes Yes 20969216 (100%) 248 ( 0%)

c01nsd01 20971520 3001 Yes Yes 20713472 ( 99%) 488 ( 0%)

c02nsd01 20971520 3002 Yes Yes 20714496 ( 99%) 440 ( 0%)

c03nsd01 20971520 3003 Yes Yes 20714496 ( 99%) 456 ( 0%)

------------- -------------------- -------------------

(pool total) 83886080 83111680 ( 99%) 1632 ( 0%)

============= ==================== ===================

(total) 83886080 83111680 ( 99%) 1632 ( 0%)

Inode Information

-----------------

Number of used inodes: 4087

Number of free inodes: 81929

Number of allocated inodes: 86016

Maximum number of inodes: 86016
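Since mmdeldisk has to move data onto the remaining disks, the mmdf figures above can be used for a rough pre-check. The sketch below compares the space in use on the disk to be removed with the free space on the others. `can_evacuate` is a hypothetical helper and a deliberate simplification: it ignores fragments, replication settings, and failure groups.

```shell
# Hypothetical helper: read "mmdf <fs>" output on stdin and succeed if the
# free space on the other disks covers what is in use on the target disk.
can_evacuate() {
    target="$1"
    awk -v t="$target" '
        # Disk rows look like: name size failure_group Yes|No Yes|No free_kb ...
        $4 ~ /^(Yes|No)$/ && $5 ~ /^(Yes|No)$/ {
            if ($1 == t) { used = $2 - $6; found = 1 }
            else         { free_elsewhere += $6 }
        }
        END {
            if (!found) exit 2      # target disk not in the listing
            printf "used on %s: %d KB, free elsewhere: %d KB\n", t, used, free_elsewhere
            exit !(free_elsewhere >= used)
        }
    '
}

# Usage:
# mmdf gpfs1 | can_evacuate c01nsd01 && mmdeldisk gpfs1 c01nsd01
```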

# Delete one of the NSDs from gpfs1

[root@c01 gpfs1]# mmdeldisk

mmdeldisk: Missing arguments.

Usage:

mmdeldisk Device {"DiskName[;DiskName...]" | -F DiskFile} [-a] [-c]

[-m | -r | -b] [-N {Node[,Node...] | NodeFile | NodeClass}]

[root@c01 gpfs1]# mmdeldisk gpfs1 c01nsd01

Deleting disks ...

Scanning file system metadata, phase 1 ...

Scan completed successfully.

Scanning file system metadata, phase 2 ...

Scan completed successfully.

Scanning file system metadata, phase 3 ...

Scan completed successfully.

Scanning file system metadata, phase 4 ...

Scan completed successfully.

Scanning user file metadata ...

100.00 % complete on Tue Oct 3 14:42:18 2017

Scan completed successfully.

Could not invalidate disk(s).

Checking Allocation Map for storage pool system

tsdeldisk completed.

mmdeldisk: Propagating the cluster configuration data to all

affected nodes. This is an asynchronous process.

[root@c01 gpfs1]# mmlsnsd

File system Disk name NSD servers

---------------------------------------------------------------------------

gpfs1 c02nsd01 c02

gpfs1 c03nsd01 c03

gpfs1 c01nsd02 c01

(free disk) c01nsd01 c01

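5. Adding a node

Add a client node to the cluster that serves gpfs1. The main steps are:

- Prepare the client operating system;

- Install the GPFS packages;

- Add the node to the cluster.

# Install the same Linux version as on the other nodes

# Allow remote SSH login as root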

[root@c04 ~]# cat /etc/ssh/sshd_config |grep Root

PermitRootLogin yes

[root@c04 ~]# service sshd restart

Stopping sshd: [ OK ]

Starting sshd: [ OK ]

# Install prerequisite packages

[root@c04 ~]# yum -y install ksh perl

[root@c04 ~]# yum -y install kernel kernel-headers kernel-devel

[root@c04 ~]# yum -y install cpp gcc gcc-c++

# Disable SELinux, then reboot

[root@c04 ~]# sed -i "s#SELINUX=enforcing#SELINUX=disabled#g" /etc/selinux/config

# Prepare the installation media and install

[root@c04 gpfs]# rpm -ivh *.rpm

Preparing... ########################################### [100%]

1:gpfs.base ########################################### [ 25%]

2:gpfs.gpl ########################################### [ 50%]

3:gpfs.msg.en_US ########################################### [ 75%]

4:gpfs.docs ########################################### [100%]

# Upgrade

[root@c04 gpfs]# cd ../gpfs_update/

[root@c04 gpfs_update]# rpm -ivhU *.rpm

Preparing... ########################################### [100%]

1:gpfs.base ########################################### [ 25%]

2:gpfs.gpl ########################################### [ 50%]

3:gpfs.msg.en_US ########################################### [ 75%]

4:gpfs.docs ########################################### [100%]

[root@c04 gpfs_update]# rpm -qa |grep gpfs

gpfs.base-3.5.0-34.x86_64

gpfs.msg.en_US-3.5.0-34.noarch

gpfs.gpl-3.5.0-34.noarch

gpfs.docs-3.5.0-34.noarch

# Compile and verify

[root@c04 src]# make LINUX_DISTRIBUTION=REDHAT_AS_LINUX Autoconfig World InstallImages

## This requires the kernel, kernel-headers, kernel-devel, cpp, gcc, and gcc-c++ packages

[root@c04 src]# ls -l /lib/modules/2.6.32-696.10.3.el6.x86_64/extra/

total 6332

-rw-r--r-- 1 root root 2760629 Oct 1 23:09 mmfs26.ko

-rw-r--r-- 1 root root 2798374 Oct 1 23:09 mmfslinux.ko

-rw-r--r-- 1 root root 918694 Oct 1 23:09 tracedev.ko

# Update the hosts file; every node should contain the following entries

[root@c04 src]# cat /etc/hosts |grep c0

10.10.1.173 c01

10.10.1.174 c02

10.10.1.175 c03

10.10.1.176 c04

# SSH mutual trust

[root@c04 src]# ssh-keygen

[root@c04 src]# ssh-copy-id c01

[root@c04 src]# ssh-copy-id c02

[root@c04 src]# ssh-copy-id c03

[root@c04 src]# ssh-copy-id c04

[root@c01 src]# ssh-copy-id c04

[root@c02 src]# ssh-copy-id c04

[root@c03 src]# ssh-copy-id c04

# Add c04 to the cluster. Note: if the new node is a quorum node, the whole cluster must be shut down first.

[root@c01 ~]# mmshutdown -a

...

# mmaddnode -N {NodeDesc[,NodeDesc...] | NodeFile}

[root@c01 ~]# mmaddnode -N c04:quorum

Sun Oct 1 12:35:00 CST 2017: mmaddnode: Processing node c04

Verifying GPFS is stopped on all nodes ...

mmaddnode: Command successfully completed

mmaddnode: Warning: Not all nodes have proper GPFS license designations.

Use the mmchlicense command to designate licenses as needed.

mmaddnode: Propagating the cluster configuration data to all

affected nodes. This is an asynchronous process.

[root@c01 ~]# mmchlicense server --accept -N c04

The following nodes will be designated as possessing GPFS server licenses:

c04

mmchlicense: Command successfully completed

mmchlicense: Propagating the cluster configuration data to all

affected nodes. This is an asynchronous process.

[root@c01 ~]# mmstartup -a

Sun Oct 1 12:37:21 CST 2017: mmstartup: Starting GPFS ...

After about 20 seconds:

[root@c01 ~]# mmgetstate -a

Node number Node name GPFS state

------------------------------------------

1 c01 active

2 c02 active

3 c03 active

4 c04 active

The file system is visible on c04

[root@c04 src]# df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/mapper/vg_c04-lv_root 18003272 1241608 15840476 8% /

tmpfs 502056 0 502056 0% /dev/shm

/dev/sda1 487652 51792 410260 12% /boot

/dev/gpfs1 62914560 772096 62142464 2% /gpfs1

[root@c04 src]# cd /gpfs1/

[root@c04 gpfs1]# ls -l

total 0

-rw-r--r-- 1 root root 111 Oct 1 05:44 test1.txt

[root@c04 ~]# cd /usr/lpp/mmfs/bin/

[root@c04 bin]# ./mmgetstate -aL

Node number Node name Quorum Nodes up Total nodes GPFS state Remarks

------------------------------------------------------------------------------------

1 c01 3 4 4 active quorum node

2 c02 3 4 4 active quorum node

3 c03 3 4 4 active quorum node

4 c04 3 4 4 active quorum node

# Change the designation of c04 to a regular (non-quorum) node

[root@c01 gpfs1]# mmchnode

mmchnode: Missing arguments.

Usage:

mmchnode change-options -N {Node[,Node...] | NodeFile | NodeClass}

or

mmchnode {-S Filename | --spec-file=Filename}

[root@c01 gpfs1]# mmchnode -N c04 --nonquorum

Tue Oct 3 14:55:49 CST 2017: mmchnode: Processing node c04

mmchnode: Propagating the cluster configuration data to all

affected nodes. This is an asynchronous process.

[root@c01 gpfs1]# mmgetstate -aL

Node number Node name Quorum Nodes up Total nodes GPFS state Remarks

------------------------------------------------------------------------------------

1 c01 2 3 4 active quorum node

2 c02 2 3 4 active quorum node

3 c03 2 3 4 active quorum node

4 c04 2 3 4 active
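The Quorum column in the two mmgetstate listings is consistent with a simple majority of the quorum nodes: 3 of 4 while c04 was a quorum node, and 2 of 3 afterwards. A one-line sketch of that arithmetic (the function name `quorum_needed` is hypothetical):

```shell
# Hypothetical helper: majority of N quorum nodes, i.e. floor(N / 2) + 1.
quorum_needed() {
    echo $(( $1 / 2 + 1 ))
}

# quorum_needed 4   # prints 3, as in the listing with c04 as a quorum node
# quorum_needed 3   # prints 2, as in the listing after --nonquorum
```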

6. Deleting the file system

# Unmount the GPFS file system on all nodes (mmumount accepts -a to do this from one node)

[root@c01 ~]# mmumount /dev/gpfs1

Tue Oct 3 16:04:51 CST 2017: mmumount: Unmounting file systems ...

# Delete the file system; this destroys the data on its NSDs

[root@c01 ~]# mmdelfs /dev/gpfs1

All data on the following disks of gpfs1 will be destroyed:

c02nsd01

c03nsd01

c01nsd02

Completed deletion of file system /dev/gpfs1.

mmdelfs: Propagating the cluster configuration data to all

affected nodes. This is an asynchronous process.

[root@c01 ~]# mmgetstate -aL

Node number Node name Quorum Nodes up Total nodes GPFS state Remarks

------------------------------------------------------------------------------------

1 c01 2 3 3 active quorum node

2 c02 2 3 3 active quorum node

3 c03 2 3 3 active quorum node

[root@c01 ~]# mmlsnsd

File system Disk name NSD servers

---------------------------------------------------------------------------

(free disk) c01nsd01 c01

(free disk) c01nsd02 c01

(free disk) c02nsd01 c02

(free disk) c03nsd01 c03

# All NSDs are now in the free disk state.
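The same check can be scripted rather than read off the table. The sketch below lists any NSD that still belongs to a file system; `nsds_in_use` is a hypothetical helper that assumes the mmlsnsd layout shown above, with data rows following the dashed rule.

```shell
# Hypothetical helper: read "mmlsnsd" output on stdin and print the names
# of NSDs that still belong to a file system (not marked "(free disk)").
nsds_in_use() {
    awk '
        /^[ \t]*-+[ \t]*$/ { body = 1; next }    # rows start after the dashed rule
        # In-use rows are "fs nsd servers"; free rows start with "(free disk)".
        body && NF >= 3 && $1 != "(free" { print $2 }
    '
}

# Usage:
# [ -z "$(mmlsnsd | nsds_in_use)" ] && echo "all NSDs are free"
```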